Topic 4. Linear Classification and Regression

The following 5 articles could form a small brochure, and for good reason: linear models are the most widely used family of predictive algorithms. These articles represent our course in miniature: a lot of theory and a lot of practice. We discuss the theoretical basis of the Ordinary Least Squares method and logistic regression, as well as their merits in practical applications. Crucial concepts like regularization and learning curves are also introduced. In the practical part, we apply logistic regression to the task of user identification on the Internet, posed as a Kaggle Inclass competition (a.k.a. “Alice”).
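To make the OLS and regularization ideas concrete, here is a minimal NumPy sketch of the closed-form least-squares solution and its L2-regularized (ridge) variant on a toy, noiseless dataset. The function `fit_linear` and its `alpha` parameter are hypothetical names chosen for this illustration; it is a sketch, not code from the course notebooks.

```python
import numpy as np


def fit_linear(X, y, alpha=0.0):
    """Closed-form least squares: solve (X^T X + alpha * I) w = X^T y.

    alpha = 0 gives plain OLS (the normal equations); alpha > 0 adds an
    L2 (ridge) penalty. For simplicity the intercept column is penalized too.
    """
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    # Solving the linear system is numerically safer than inverting A
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=50)
X = np.column_stack([np.ones_like(x), x])  # intercept column + one feature
y = 2.0 + 3.0 * x                          # noiseless targets

w_ols = fit_linear(X, y)                # approximately [2, 3]
w_ridge = fit_linear(X, y, alpha=10.0)  # coefficients shrunk toward zero

print("OLS:  ", w_ols)
print("Ridge:", w_ridge)
```

Because the targets are noiseless, OLS recovers the true coefficients, while the ridge penalty trades some fit for smaller weights. In practice, scikit-learn's `LinearRegression` and `Ridge` cover the same ground.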

Steps in this block

1. Read 5 articles:

“Ordinary Least Squares” (same as a Kaggle Notebook);

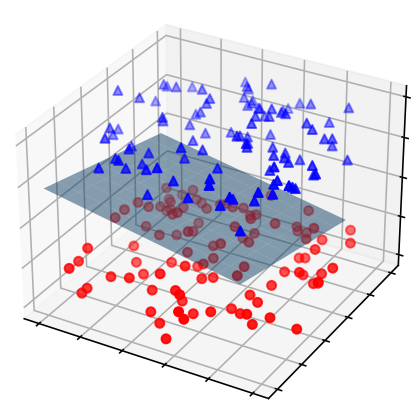

“Logistic Regression” (same as a Kaggle Notebook);

“Regularization” (same as a Kaggle Notebook);

“Pros and Cons of Linear Models” (same as a Kaggle Notebook);

“Validation and learning curves” (same as a Kaggle Notebook);

2. Watch a two-part video lecture on logistic regression:

the theory behind logistic regression from the ML perspective;

the practical part: beating baselines in the “Alice” competition;

3. Watch a two-part video lecture on regression and regularization:

the theory behind linear models, an intuitive explanation;

a business case discussing a real regression task: predicting customer Life-Time Value;

4. Complete Demo Assignment 4 (same as a Kaggle Notebook), where you explore OLS, Lasso, and Random Forest in a regression task;

5. Check out the solution (same as a Kaggle Notebook) to the demo assignment (optional);

6. Complete Bonus Assignment 4, where you’ll be guided through working with sparse data, feature engineering, model validation, and the process of competing on Kaggle. The task is to beat baselines in the “Alice” Kaggle competition. This assignment is very useful for anyone starting to practice Machine Learning, whether or not they want to compete on Kaggle (optional, available under the Patreon “Bonus Assignments” tier).
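The logistic regression material in the articles and lectures above can be previewed with a minimal NumPy sketch: batch gradient descent on the L2-regularized log-loss. It uses synthetic two-class data instead of the “Alice” dataset, and the names `fit_logreg`, `C`, `lr`, and `n_iter` are hypothetical choices for this illustration. Here `C` acts as an inverse regularization strength, in the same spirit as the `C` parameter of scikit-learn's `LogisticRegression`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logreg(X, y, C=1.0, lr=0.1, n_iter=2000):
    """Batch gradient descent on L2-regularized logistic loss.

    Minimizes mean(log-loss) + ||w||^2 / (2 * C * n), so larger C means
    weaker regularization. Labels y must be 0/1; the bias b is not penalized.
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(n_iter):
        p = sigmoid(X @ w + b)  # predicted P(y = 1 | x)
        err = p - y             # derivative of log-loss w.r.t. the logit
        w -= lr * (X.T @ err / n + w / (C * n))
        b -= lr * err.mean()
    return w, b


# Synthetic data: two Gaussian blobs centered at (-1, -1) and (1, 1)
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=-1.0, scale=0.5, size=(100, 2)),
    rng.normal(loc=1.0, scale=0.5, size=(100, 2)),
])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, b = fit_logreg(X, y, C=1.0)
pred = (sigmoid(X @ w + b) >= 0.5).astype(float)
accuracy = (pred == y).mean()
print(f"weights: {w}, bias: {b:.3f}, train accuracy: {accuracy:.2f}")
```

For real tasks such as “Alice”, with large sparse feature matrices, a library solver like scikit-learn's `LogisticRegression` is the practical choice; the loop above only illustrates the underlying optimization.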