Topic 6. Feature Engineering and Feature Selection

Feature engineering is one of the most interesting processes in all of ML. It’s an art, or at least a craft, and is therefore not yet well automated. The article describes ways of working with heterogeneous features in various ML tasks involving texts, images, geodata, etc. Practice with one more Kaggle competition should convince you how powerful feature engineering can be, and that it’s a lot of fun as well!

Steps in this block

1. Read the article (also available as a Kaggle Notebook);

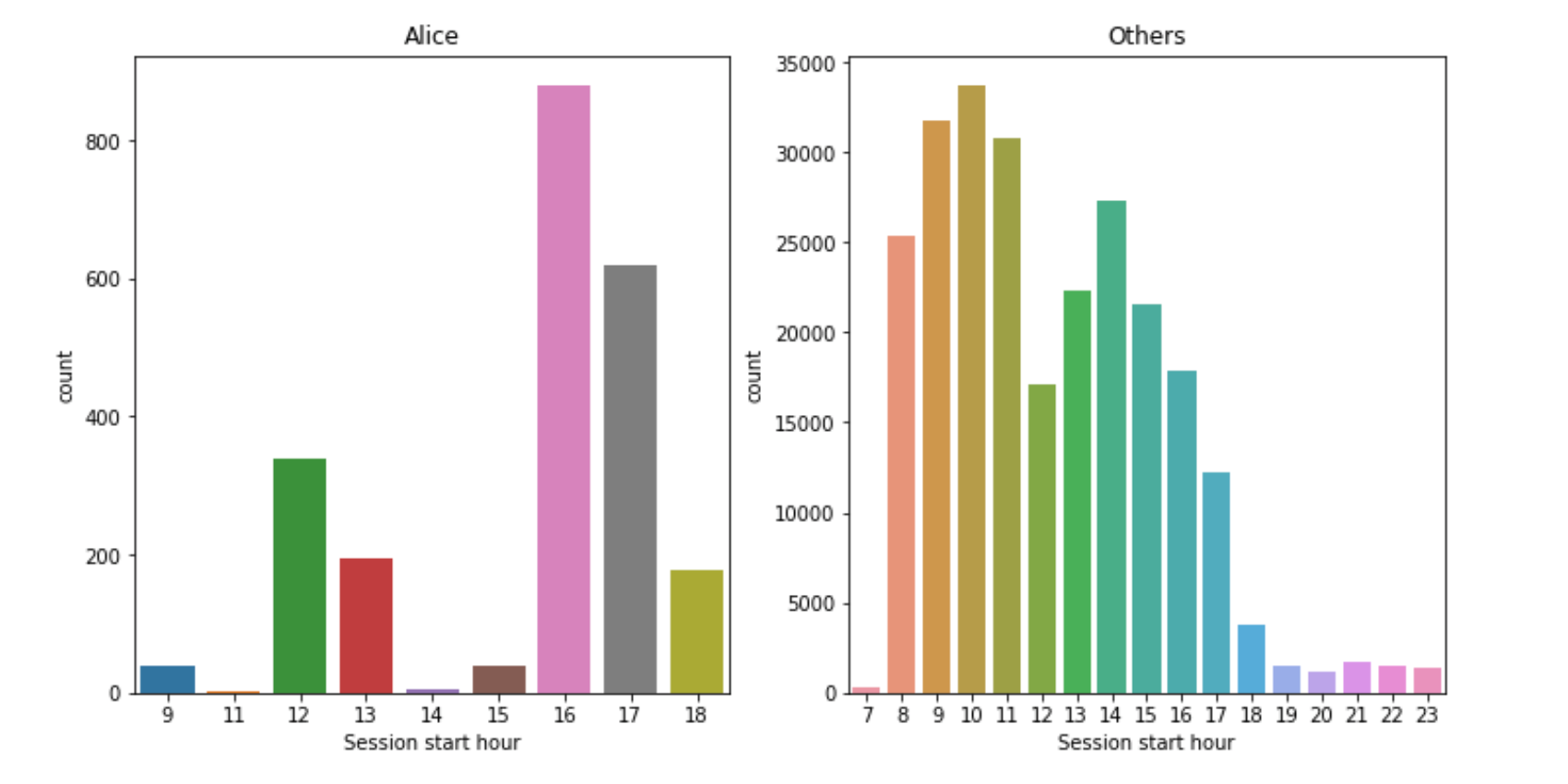

2. Kaggle: after the simple baselines in the “Alice” competition (see Topic 4), check out some slightly more advanced Notebooks:

“Model validation in a competition”;

Continue with feature engineering and try to reach a ROC AUC of about 0.955 (or higher) on the Public Leaderboard. Alternatively, if a better solution has already been shared by the time you join the competition, try to improve on the best publicly shared solution by at least 0.5%. However, please do not share high-performing solutions: doing so ruins the competitive spirit and also hurts other courses that have this competition in their syllabus;

3. Complete Bonus Assignment 6, where we walk you through beating a baseline in a competition whose task is to predict the popularity of an article published on Medium. The basic solution uses text only, but along the way you’ll create many additional features to improve the model. In this assignment you’ll also learn some dirty Kaggle hacks (optional, available under the Patreon “Bonus Assignments” tier).
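To give a flavour of what "additional features" beyond the raw text might look like, here is a minimal sketch that uses only the Python standard library. The record keys (`published`, `title`, `content`) and the feature names are hypothetical and chosen purely for illustration; they are not the assignment's actual data schema or solution.

```python
from datetime import datetime
import math


def extra_article_features(article: dict) -> dict:
    """Derive a few simple non-text features from one article record.

    The keys 'published', 'title' and 'content' are assumptions made
    for this sketch, not the real competition fields.
    """
    published = datetime.fromisoformat(article["published"])
    n_words = len(article["content"].split())
    return {
        "hour": published.hour,                        # hour of publication
        "weekday": published.weekday(),                # Monday == 0
        "is_weekend": int(published.weekday() >= 5),   # Saturday or Sunday
        "title_len": len(article["title"]),            # title length in characters
        "log_n_words": math.log1p(n_words),            # log-scaled body length
    }


# A made-up record, used only to show the call.
example = {
    "published": "2017-06-03T21:15:00",
    "title": "Feature engineering in practice",
    "content": "Short body text used only for illustration.",
}
features = extra_article_features(example)
print(features)
```

Features like these would typically be stacked next to the text representation before fitting a model; whether any of them actually helps should be checked with proper validation rather than assumed.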